Upstream Downstream Word Problems Model,Vintage Wooden Model Boats For Sale Young,Ex Fishing Trawler For Sale Uk,Triton Bass Boat For Sale Near Me Now - Plans On 2021

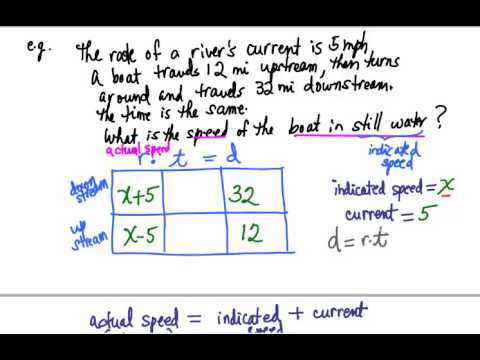

The rate of the water flowing in the channel was 2 miles per hour. The total time it took them to kayak up and back was 3 hours and 40 minutes. Assuming they were paddling their kayak at a fairly consistent rate, find the rate at which Evelyn and Meridith were paddling.

The downstream rate is faster than the boat's rate in still water, since the flow of the water speeds them up.

The total time was 3 hours and 40 minutes. We need this in terms of hours, so the minutes become a fraction of an hour, giving 3 2/3 hours (11/3 hours). What's left is a system of two equations and two unknowns. I'll start by solving the first equation for t in terms of b.
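Written out with the quantities stated in the problem (1 mile each way, a 2 mph current, 3 hours 40 minutes in total), the setup can be sketched as follows. Here b is the paddling rate in still water, t the upstream time and T the downstream time; the quadratic step and the decimal value are my own working under those assumptions, not part of the original answer.

```latex
\begin{align*}
(b-2)\,t &= 1, \qquad (b+2)\,T = 1, \qquad T = \tfrac{11}{3} - t \\
t &= \frac{1}{b-2} \\
\frac{1}{b-2} + \frac{1}{b+2} &= \frac{11}{3}
\;\Longrightarrow\; \frac{2b}{b^2-4} = \frac{11}{3}
\;\Longrightarrow\; 11b^2 - 6b - 44 = 0 \\
b &= \frac{6 + \sqrt{36 + 4\cdot 11\cdot 44}}{22} = \frac{3 + \sqrt{493}}{11} \approx 2.29 \text{ mph}
\end{align*}
```

Only the positive root is kept, and it has to exceed 2 mph, otherwise the kayak could not make headway against the current.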

This is what the question asked. If we wanted to know the time we could solve for "t" and "T", but we've not been asked to do that, so I'll leave it at that.


Evelyn and Meridith decided to kayak 1 mile up and then back in the Humboldt channel.




NOTE: We need to stress that on its own, without installing any of the cores, the most you will be able to do with RetroArch is watch some movie files and play back music files through its built-in ffmpeg core. To make it do anything else, you will have to install cores. Apart from these aforementioned changes, there will be no substantial differences for now in the Steam version.

We will try to do our best to be as receptive to the feedback as possible with the thickest amount of skin possible, and try to suitably make some much needed UI changes. This is also what helped inform our decision to go with 10 cores. We could have launched with over 60 cores, sure, but the ensuing fallout would have been a mess and it would have been near impossible to focus on bug reports and issues piling in.

By focusing on 10 cores, we can do some much-needed Quality Control where issues inevitably get picked up, we can respond to it and in the process improve the quality of the core. This kind of isolated feedback time with a specific batch of cores is something we have found ourselves in the past always lacking, since it was always off to do the Next Big Thing as new features, cores, and other developments are made on an almost weekly basis.

This gives us the much-needed time to focus on a specific batch of cores and polish them before we move on to the next batch of cores. So this has been a project that has been cooking in the oven for about a year in the form of a bounty. The goal is to come up with a way to not only dump all the textures of a PlayStation1 game, but also to replace them with user-supplied textures. So far we have let it cook slowly in the oven. However, the recent release of people preparing a Proof Of Concept demo in the form of a Chrono Cross texture pack and the circulation of a modified Beetle PSX HW core that adds support for custom texture injection has led us to make the decision to include this feature already in the buildbot cores rather than wait it out.

We hope that by doing this, the feature can grow organically and that more people start taking an active interest in making their own texture packs this way for their own favorite content. Libretro is all about giving people the power and freedom to do what they want with their legally bought content, after all. Note that this will not work with either OpenGL or software rendering.

The usual. While the game is running, it will dump all currently active textures it comes across to a directory. The name of this folder is [gamename]-texture-replacements, and it will be dumped inside the same dir that your content (ISO or other image format) comes from. When replacing textures, the folder has the same name, [gamename]-texture-replacements, and the core will try to read this directory from the same dir that your content (ISO or other image format) comes from.

NOTE: Later on, we might add another option that allows you to point the dumping and injection path to somewhere else. Right now this is a problem, for instance, when you have your content stored on a slow disk device like an HDD but you want your texture replacement files to be read from your much faster but smaller SSD instead. Make sure you have the textures extracted already in your [gamename]-texture-replacements dir, and make sure that the dir is in the same dir that your game content file (ISO or other image format) comes from.
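As a rough illustration of the naming rule above, here is a minimal sketch that derives the expected folder from a content path. It assumes that [gamename] is the content file name without its extension, which the text does not spell out; the function name is hypothetical and is not part of the Beetle PSX HW core.

```python
from pathlib import PurePosixPath


def texture_replacement_dir(content_path: str) -> PurePosixPath:
    """Return the folder expected next to the content file.

    Assumption: [gamename] is the content file name without its extension.
    """
    content = PurePosixPath(content_path)
    # Same directory as the content, with the fixed suffix described in the text.
    return content.parent / f"{content.stem}-texture-replacements"


print(texture_replacement_dir("/roms/psx/Chrono Cross.chd"))
```

If the folder is anywhere other than next to the content file, the core as described would not find it.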

We hope to provide you with an article in the near future that goes into how to create your own texture pack for a game. Is the format set in stone? Is it complete? Probably no to both. It is a Work-In-Progress. However, we hope that by putting it out there already, the community can already start experimenting with the option, putting it through its paces, and see what its limitations are and how far it can be pushed.

This combined with a dynarec-powered RSP plugin has made low-level N64 emulation finally possible for the masses at great speeds on modest hardware configurations. So something rendering at native resolution, while obviously accurate, bit-exact and all, was seen as unpalatable to them.

Many users indicated over the past few weeks that upscaling was desired. You can upscale in integer steps of the base resolution. When you set resolution upscaling to 2x, you are multiplying the input resolution by 2x. This results in even games that run at just 2x native resolution looking significantly better than the same resolution running on an HLE RDP renderer.

In order to upscale, you need to first set the Upscaling factor. By default, it is set to 1x native resolution. A few new core option features have been added. The upscaling factor for the internal resolution. Your mileage may vary, just be forewarned. Even when upscaling, the rendering is still rendering at full accuracy, and it is still all software rendered on the GPU.

What happens from there is that this internal higher resolution image is then downscaled to either half its size, one quarter of its size, or one eighth of its size.
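The arithmetic behind the upscaling factor and the downscaling steps can be sketched like this. The 320x240 input resolution and the function names are illustrative assumptions, and accepting any integer factor from 1 to 8 is a guess based on the 8x ceiling mentioned in the text, not a statement about the actual core options.

```python
def internal_resolution(native_w: int, native_h: int, upscale: int) -> tuple[int, int]:
    """Multiply the input resolution by an integer upscaling factor."""
    if not 1 <= upscale <= 8:
        raise ValueError("upscale factor assumed to be an integer from 1 to 8")
    return native_w * upscale, native_h * upscale


def downscaled_output(width: int, height: int, steps: int) -> tuple[int, int]:
    """Downscale by half (1 step), a quarter (2 steps) or an eighth (3 steps)."""
    if steps not in (1, 2, 3):
        raise ValueError("steps must be 1, 2 or 3")
    divisor = 1 << steps  # 2, 4 or 8
    return width // divisor, height // divisor


w, h = internal_resolution(320, 240, 4)   # 4x internal rendering
print((w, h), downscaled_output(w, h, 2))  # then downscaled to a quarter
```

With a 4x factor and a quarter downscale, the output lands back on the input resolution while still having been rendered from a larger internal image.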

From there, you can apply some frontend shaders on top to create a very nice and compelling look that still looks better than native resolution but is also still very faithful to it. We have so far only found one game that absolutely required this to be turned on for gameplay purposes.

There might be further improvements later to attempt to automatically detect these cases. However, turning this option on could also be desirable depending on whether you favor accurate looking graphics or a facsimile of how things used to look. This option forces native resolution rendering for such sprites. There is no intent to have yet another enhancement-focused renderer here.

This is the closest there has ever been to date of a full software rendered reimplementation of Angrylion on the GPU with additional niceties like upscaling. The renderer guarantees bit-exactness, what you see is what you would get on a real N64, no exceptions. So how is this done? Through compute shaders. This is why previous attempts like z64gl fell flat after an initial promising start.

So the value proposition here for upscaling with ParaLLEl RDP is quite compelling: you get upscaling with the most accurate renderer this side of Angrylion. It runs well thanks to Vulkan, and you can upscale all the way to 8x, which is an insane workload for a GPU done this way.

You get nice dither filtering that smooths out really well at higher resolutions and can really fake the illusion of higher bit depth. Here, we get dithering and divot filtering creating additional noise to the image leading to an overall richer picture. The focus is not on getting the majority of commercial games to just run and simulating the output they would generate through higher level API calls. Super-sampled framebuffer effects might be possible in theory.

ParaLLEl RDP is about making authentic N64 rendering look as good as possible without resorting to replacing original assets or anything bootleg like that. Again, this is because this is accurate RDP emulation. Z-fighting is a thing. The RDP only has bit UNORM of depth precision with 10 bits of fractional precision during interpolation, and compression on top of that to squeeze it down to 14 bits.

A typical HLE renderer and HLE RSP would just determine that we are about to draw some 3D geometry and then just turn it into float values so that there is a higher level of precision when it comes to vertex positioning. There, we had to go to the effort of doing PGXP in order to convert it to float. I really doubt there is any interest to contemplate this at this point.

Best to let sleeping dogs lie. HLE uses the hardware rasterization and texture units of the GPU which is far more efficient than software, but of course, it is far less accurate than software rendering. Depth bias is also notorious for behaving differently on different GPUs. A HLE renderer still has to work with a fixed function blending pipeline. A software rendered rasterizer like ParaLLEl RDP does not have to work with any pre-existing graphics API setup, it implements its own rasterizer and outputs that to the screen through compute shading.

Correct dither means applying dither after blending, among other things, which is not something you can generally do [with HLE]. It generally looks somewhat tacky to do dithering in OpenGL. VI filtering got a bad rep on the N64 because this overaggressive quasi-8x MSAA would tend to make the already low-resolution images look even blurrier.

But at higher resolutions as you can see here, it can really shine. The VI has a quite ingenious filter that distributes the dither noise where it reconstructs more color depth. This renderer is the hypothetical what-if scenario of how an N64 Pro unit would look like that could pump out insane resolutions while still having the very same hardware.

The only real change was modifying the rasterizer to test fractional pixel coordinates as well. CPU can freely read and write on top of RDP rendered data, and we can easily deal with it without extra hacks. Anyway, without much further ado, here are some glorious screenshots. GoldenEye now looks dangerously close to the upscaled bullshot images on the back of the boxart!

Super Mario Expect increased compatibility over ParaLLEl N64 especially on Android and potentially better performance in many games. I will also probably release a performance test-focused blog post later testing a variety of different GPUs and how far we can take them as far as upscaling is concerned.

With 1x native resolution I manage to get on average 64 to 70fps with the same games. So obviously mobile GPUs still have a lot of catching up to do with their discrete big brothers on the desktop. At least it will make for a nice GPU benchmark for mobile hardware until we eventually crack fullspeed with 2x native!

Stay tuned! What you might not have realized from reading the article is that with the right tweaks, you can already get ParaLLEl RDP to run reasonably well. As indicated in the article he wrote, Themaister will be looking at WSI Vulkan issues specifically related to RetroArch since there definitely do seem to be some issues that have to be resolved.

In the meantime, we have to resort to some workarounds. Workarounds or not, they will do the job for now. Once you have done this, the performance will actually not be that far behind with a run-of-the-mill iGPU from say a Ti in asynchronous mode. With synchronous, the difference between say a Ti and an iGPU should be a bit more pronounced. Hopefully in future RetroArch versions, it will no longer be necessary to have to resort to windowed mode for good performance with Intel iGPUs.

For now, this workaround will do. If not, performance will be so badly crippled that even Angrylion will be faster by comparison. Fortunately, there will be no noticeable screen tearing even with Vsync disabled right now. You should therefore expect significantly better performance on a US model. There might still be the odd frame drop in certain graphics intensive scenes but nothing too serious.

Similarly, games like Snowboarding drop below fullspeed with the NTSC version, but running them with the PAL version is nearly a locked framerate in all but the most intensive scenes.

Performance on a high-end phone like the Galaxy S10 Plus can tend to be more variable, probably because of the aggressive dynamic throttling being done on phones. Sometimes performance would be a significant step above the Shield TV, where it could run NTSC versions of games like Legend of Zelda: Ocarina of Time and Super Mario 64 at fullspeed with no problem (save for the very odd frame drop here and there in very rare scenes), and then at other times it would perform similarly to a Shield TV.

Your mileage may vary there. Even a Snapdragon version of the S10 Plus would produce better results than what we see here. So, Low-Level N64 emulation, is it attainable on Android? Yes, with the proper Vulkan extensions, and provided you have a reasonably modern and fast high end phone.

The Shield TV is also a decent mid-range performer considering its age. Far from every game runs at fullspeed yet but the potential is certainly there for this to be a real alternative to HLE based N64 emulation on Android as hardware grows more powerful over the years.

So this situation will sort itself out. Known issue, read above. Mostly performance related and working around various drivers. Unsurprisingly, some bugs were found, but very few compared to what I expected. I can only count 3 actual bugs. Core bugs are unfortunately quite common and a lot of core bugs were mistaken as RDP ones.

However, the initial implementation only considered that Cycle 0 would observe a valid LODFrac value. Fixing the bug was as simple as considering that case as well, and the RDP dump validated bit-exact against Angrylion. I believe this also fixed some weird glitching in Star Wars: Naboo. At least it too passed bit-exact after this fix was in place.

Some games, Mario Tennis in particular will occasionally attempt to upload textures with broken coordinates. Fairly simple fix once I reproduced it. This was a good old case of a workaround for another game causing issues. When the VI is fed garbage input, we should render black, but that causes insane flickering in San Francisco Rush, since it strobes invalid state every frame.

Right now, the old parallel-n64 Mupen core is kind of the weakest link, and almost all issues people report as RDP bugs are just core bugs. The implementation is quite accurate, and tracks writes on a per-byte basis. This is a chunk of primitives which all render to the same addresses in RDRAM and which do not have any feedback effect, where texture data is sampled from the frame buffer region being rendered to.

We also have a bunch of potential writes after the render pass. If there are no pending writes by GPU, we optimize to a straight copy. Noticeable, but not crippling. I also fixed a bunch of issues with cache management in paraLLEl-RDP which would never happen on a desktop system, since everything is essentially cache coherent.

One day Android will catch up. The major bulk of the work was fixing some performance issues which would come up in some situations. Just dump out some simple JSON and off you go. This was one of the major questions I had, and I figured out why using this new tool. I filed a Mesa bug for this. Trace captured on my UHD ultrabook which shows buggy driver behavior. Stalling 6 ms in the main emulation thread is not fun. This was actually a parallel-n64 bug again.

The RDP integration was notified too often that a frame was starting, and thus would wait for GPU work to complete far too early. This would essentially turn Async mode into Sync mode in many cases. Mario Tennis is pretty crazy in how it renders some of its effects. This was a pathological case in the implementation that ran horribly.

The original design of paraLLEl-RDP was for larger render passes to be batched up with a sweet spot of around 1k primitives in one go, and each render pass would correspond to one vkQueueSubmit. This assumption fell flat in this case. To fix this I rewrote the entire submission logic to try to make more balanced submits to the GPU.

Not too large, and not too small. Tiny render passes back-to-back will now be batched together into one command buffer, and large render passes will be split up. The goal is to submit a meaningful chunk of work to the GPU as early as possible, and not hoard tons of work while the GPU twiddles its thumbs.
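The balancing idea (merge tiny render passes, split huge ones) can be sketched as below. This is not the paraLLEl-RDP submission logic: the thresholds, the function name, and the choice to model a render pass as a bare primitive count are all assumptions made for illustration.

```python
def plan_submissions(render_pass_sizes, min_batch=64, max_batch=1024):
    """Group render passes (given as primitive counts) into GPU submissions.

    min_batch and max_batch are hypothetical thresholds, not values from the source.
    """
    submissions = []
    pending = 0  # primitives from small passes waiting to be submitted together
    for size in render_pass_sizes:
        # Split a large pass so the GPU gets work early instead of one huge chunk.
        while size > max_batch:
            if pending:
                submissions.append(pending)  # flush first to preserve ordering
                pending = 0
            submissions.append(max_batch)
            size -= max_batch
        # Accumulate small passes until they form a meaningful chunk of work.
        pending += size
        if pending >= min_batch:
            submissions.append(pending)
            pending = 0
    if pending:
        submissions.append(pending)  # final flush, e.g. when a full sync is hit
    return submissions


print(plan_submissions([10, 10, 10, 50, 3000, 5]))
```

In this toy run the four small passes collapse into one submission while the 3000-primitive pass is spread over three, which is the "not too large, not too small" behaviour described above.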

This is critically important for Sync mode I found, because once we hit a final SyncFull opcode, we will need to wait for the GPU to complete all pending work. Overall, this completely removed the performance issue in Mario Tennis for me, and overall performance improved by a fair bit.

RDP overhead in sync mode usually accounts for 0. The amount of work that needs to happen for a single pixel is ridiculous when bit-exactness is the goal. However, shaving away stupid, unnecessary overhead has a lot of potential for performance uplift. Read our latest Libretro Cores Progress Report blog post here. A couple of users have complained about a feature we made in 1. When disabled, playlists will be sorted the old way according to file name , not by display name.

A new language has been added, Slovakian. And plenty of the existing languages have received big updates as far as localization goes. But by far the biggest change is our transition to Crowdin. You can see the completion status of the various languages on our Crowdin page. RetroArch PS2 is now being built with a modern version of the GCC compiler, and certain cores are already seeing massive speedups as a result. I honestly didn't expect this, but it looks that new releases also have better performance in some cores.

Trujillo fjtrujy May 21, This makes the core more than fast enough to use runahead, on a PlayStation2 of all things! Our last core progress report was on April 2. We are listing changes that have happened since then. RetroArch seems to be well-behaved here and does the correct thing. Other frontends might not play ball though. Hook up 3dfx core options. Vita: Fix dynarec, fix build. Add build options to make bassmidi and fluidsynth optional. Fix ARM dynarec. Correct cdrom sector size field length according to docs.

Refactor input mapper: code should be simpler to understand now. We add new case conversion functions for this in the new util. Add core option for setting the free space when auto-mounting drive C. Ensure overlay mount path ends with a dir separator; otherwise dosbox will write data in bogus directories in the overlay. This option allows these games to run without having to modify their configuration files first.

This allows the core to work and be distributed in a GPL-compliant way without those libraries. Also corrects the issue of input not working correctly when using two controllers. All of this can be remapped via quick menu using the input mapper.

Fix gamepad emulated mouse inputs not showing in mapper sometimes. Vita: Fix dynarec. Vita: Build fix. Switch to libco provided by libretro-common; the libco embedded here crashes on Vita. Correct an oversight of r when floppy disks are mounted.

This gives a medium improvement generally fps faster on the beach in Crash 1 and a large improvement when doing lots of blending fps before, fps after, behind the waterfall in Water Dragon Isle in Chrono Cross. Any decent platform should be able to handle scanline effect shaders at least.

Affects the following mappers below. Move Cnrom database to ines-correct. We will release a Cores Progress report soon going over all the core changes that have happened since the last report. There are many things this release post will not touch upon, such as all the extra cores that have been added to the various console platforms. Some major netplay regressions snuck into version 1. Above is a random screenshot showing what it looks like.

This was only set to ON by default for consistency with legacy setups. There is no material benefit to this; in fact, a global core options file has downsides, such as difficulty in editing option values by hand. With per-core options, it is easy to remove settings for unwanted cores.

Since settings are automatically imported from the legacy global file on first run when per-core files are enabled, changing the default behaviour will not harm any existing installation. This means the following happens even when cheevos are disabled:. When Cheevos Achievements are disabled, all these things are unnecessary work, causing increased loading times and memory usage.

On platforms with low memory i. Adding an option to allow the players to start a gaming session with all achievements active even the ones they have as unlocked on RetroAchievements. This allows users to handle their own presets without having to mess with the directory configuration on distros such as ArchLinux, where shaders among other assets are managed through additional packages.

But it also goes a bit further and changes the order of the preset directories, searching first on the Menu Config path, then on the Video Shader path, and finally on the directory of the config file. This would improve the portability of the configuration for Android users, because they cannot explore the default shaders directory without rooting their devices.

Moreover, I think it makes more sense, as regular configuration overrides are already being stored on the Menu Config path by default.
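The lookup order described above (Menu Config path first, then the Video Shader path, then the directory of the config file) can be sketched as follows. The function and parameter names are hypothetical; this is not RetroArch's actual code, only an illustration of the ordering.

```python
from pathlib import Path
from typing import Optional


def find_preset(name: str, menu_config_dir: str, video_shader_dir: str,
                config_file: str) -> Optional[Path]:
    """Return the first existing preset, searching in the order from the text."""
    search_order = [
        Path(menu_config_dir),      # 1. Menu Config path
        Path(video_shader_dir),     # 2. Video Shader path
        Path(config_file).parent,   # 3. directory of the config file
    ]
    for directory in search_order:
        candidate = directory / name
        if candidate.is_file():
            return candidate
    return None  # no preset found in any of the three locations
```

Putting the Menu Config path first means a user-writable location wins over a packaged shader directory, which matches the portability argument made for Android and for distros that ship shaders as separate packages.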

Every design of the old implementation has been scrapped, and a new implementation has arisen from the ashes. This time, I wanted to do it right. Writing a good, accurate software renderer on a massively parallel architecture is not easy and you need to rethink everything. For this first release, I integrated it into parallel-n64. It is licensed as MIT, so feel free to integrate it in other emulators as well.

The new implementation is implemented in a test-driven way. The Angrylion renderer is used as a reference, and the goal is to generate the exact same output in the new renderer. I started writing an RDP conformance suite. To pass, we must get an exact match. This is all fixed-point arithmetic, no room for error! Passed 2. Passed 3. Passed Ideally, if someone is clever enough to hook up a serial connection to the N64, it might be possible to run these tests through a real N64, that would be interesting.
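The pass criterion described above, an exact match against the reference output, comes down to a byte-for-byte comparison. Here is a minimal sketch, assuming both renderers' output is available as raw bytes; the function name is hypothetical and is not taken from the actual conformance suite.

```python
from typing import Optional


def first_mismatch(reference: bytes, candidate: bytes) -> Optional[int]:
    """Return the offset of the first differing byte, or None if bit-exact."""
    for offset, (ref_byte, cand_byte) in enumerate(zip(reference, candidate)):
        if ref_byte != cand_byte:
            return offset
    if len(reference) != len(candidate):
        # The common prefix matches, but one buffer is longer than the other.
        return min(len(reference), len(candidate))
    return None


def passes(reference: bytes, candidate: bytes) -> bool:
    """A test passes only on an exact match; there is no tolerance."""
    return first_mismatch(reference, candidate) is None
```

Reporting the first differing offset rather than a plain pass/fail makes it easier to map a failure back to a pixel when drilling down on a bug.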

I also fully implemented the VI this time around. It passes bit-exact output with Angrylion in my tests and there is a VI conformance suite to validate this as well. I implemented almost the entire thing without even running actual content.

Once I got to test real content and sort out the last weird bugs, we get to the next important part of a test-driven development workflow. A critical aspect of verifying behavior is being able to dump RDP commands from the emulator and replay them.

On the left I have Angrylion and on the right paraLLEl-RDP running side by side from a dump where I can step draw by draw, and drill down any pesky bugs quite effectively. This humble tool has been invaluable.

The Angrylion backend in parallel-n64 can be configured to generate dumps which are then used to drill down rendering bugs offline. We went through essentially all relevant titles during testing just the first few minutes , and found and fixed the few issues which popped up. Many games which were completely broken in the old implementation now work just fine. With Vulkan in I have some more tools in my belt than was available back in the day.

Vulkan is a quite capable compute API now. This creates a huge burden on GPU-accelerated implementations as we now have to ensure full coherency to make it accurate. That way, all framebuffer management woes simply disappear, I render straight into RDRAM, and the only thing left to do is to handle synchronization. The last implementation was tile-based as well, but the design is much improved. Of course, for iGPU, there is no?

This time, I take full advantage of Vulkan specialization constants which allow me to fine-tune the shader for specific RDP state. This turned out to be an absolute massive win for performance. To avoid the dreaded shader compilation stutter, I can always fallback to a generic ubershader while pipeline is being compiled which is slow, but works for any combination of state.
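The fallback scheme can be sketched in a few lines: use a specialized pipeline when one is ready, otherwise draw with the generic ubershader while the specialized one compiles. This is a toy model with hypothetical names; it does not use the Vulkan API and is not the paraLLEl-RDP implementation.

```python
class PipelineCache:
    """Toy model of 'specialized pipeline if ready, ubershader otherwise'."""

    UBERSHADER = "ubershader"  # slow, but works for any combination of state

    def __init__(self):
        self.ready = {}         # RDP state key -> specialized pipeline
        self.compiling = set()  # state keys with a compile in flight

    def request(self, state_key):
        """Return a pipeline to draw with right now, never blocking."""
        if state_key in self.ready:
            return self.ready[state_key]
        # A real renderer would kick off a background compile here.
        self.compiling.add(state_key)
        return self.UBERSHADER

    def finish_compile(self, state_key):
        """Called when the (assumed asynchronous) compile has completed."""
        self.compiling.discard(state_key)
        self.ready[state_key] = f"specialized:{state_key}"


cache = PipelineCache()
print(cache.request("blend+zbuf"))   # first use falls back to the ubershader
cache.finish_compile("blend+zbuf")
print(cache.request("blend+zbuf"))   # later draws use the specialized pipeline
```

The point of the design is that a draw never waits on compilation, so the first use of a new state combination costs throughput rather than a visible stutter.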

This is a very similar idea to what Dolphin pioneered for emulation a few years ago. This is probably the hairiest part of paraLLEl-RDP by far, but I have quite a lot of gnarly tests to test all the relevant corner cases.

There are some true insane edge cases that I cannot handle yet, but the results created would be completely meaningless to any actual content. Do keep that in mind. Still, even with multithreaded Angrylion, the RDP represents a quite healthy chunk of overhead that we can almost entirely remove with a GPU implementation.

I was somewhat disappointed by this, but I have not gone into any real shader optimization work. My early analysis suggests extremely poor occupancy and a ton of register spilling. I want to create a benchmark tool at some point to help drill down these issues down the line. There are two modes for the RDP.

In async mode, the emulation thread does not wait for the GPU to complete rendering. This improves performance, at the cost of accuracy. Many games unfortunately really rely on the unified memory architecture of the N64. The default option is sync, and should be used unless you have a real need for speed, or the game in question does not need sync.

N64 is particularly notorious for these kinds of rendering challenges. The typical stall times on the CPU is in the order of 1 ms per frame, which is very acceptable. That includes the render thread doing its thing, submitting that to GPU, getting it executed and coming back to CPU, which has some extra overhead. I believe this is solid enough for a first release, but there are further avenues for improvement. Hopefully this gets implemented soon, but we will need a fallback.

Hopefully the async transfer queue can help make this less painful. There might also be incentives to rewrite some fundamental assumptions in the N64 emulator plugin specifications (can we please get rid of this crap).

EDIT: This is now done! A fine blend of masked SIMD moves, a writemask buffer, and atomics. It is rather counter-intuitive to do upscaling in an LLE emulator, but it might yield some very interesting results. Given how obscenely fast the discrete GPUs are at this task, we should be able to do a 2x or maybe even 4x upscale at way-faster-than-realtime speeds. It would be interesting to explore if this lets us avoid the worst artifacts commonly associated with upscaling in HLE.

That would be cool to see. Compute-based accurate renderers will hopefully spread to more systems that have difficulties with accurate rendering. IMHO, this release today represents one of the biggest steps that have been taken so far to elevate Nintendo 64 emulation as a whole. On the HLE front, things have progressed. GLideN64 has made big strides in emulating most of the major significant games, the HLE RSP implementation used by Mupen 64 Plus is starting to emulate most of the major micro codes that developers made for N64 games.

So on that front, things have certainly improved. There are also obviously limiting factors on the HLE front. But for the purpose of this blog article, we are mostly concerned here about Low-Level Emulation.

So, what is the state of LLE emulation? For LLE emulation, some of the advancements over the past few years has been a multithreaded version of Angrylion. Angrylion is the most accurate software RDP renderer to date. Its main problem has always been how slow it is. Multithreaded Angrylion has seen Angrylion make some big gains in the performance department previously thought unimaginable. However, Angrylion as a software renderer can only be taken so far.

Software rendering is just never going to be a particularly fast way of doing 3D rasterization. So, back in , the first attempt at making a hardware renderer that can compete with Angrylion was made.

It was a big release for us and it marked one of the first pieces of software to be released that was designed exclusively around the then-new Vulkan graphics API. You can read our old blog post here. It was a valiant first attempt at making a speedy Angrylion port to hardware.

Unfortunately, this first version was full of bugs, and it had some big architectural issues that just made further development on it very hard. This year, all the stars have aligned.

Furthermore, LLVM would take a long time recompiling code blocks, and it would cause big stutters during gameplay (for instance, bringing up the map in Doom 64 for the first time would cause like a 5-second freeze in the gameplay while it was recompiling a code block), which is obviously not ideal.

With Lightrec, all those stutters were more or less gone. So, Q1 Multithreaded Angrylion still is a software renderer and therefore it still massively bottlenecks the CPU. CPU activity also has decreased significantly. Software rendering on the CPU is just a huge bottleneck no matter which way you slice it. And you can play it on RetroArch right now, right today.

On average you can expect a 2x speedup. However, notice that at native resolution rendering, any discrete GPU since eats this workload for breakfast. Core count is a less significant factor. The GPU matters relatively little, the Ti was mostly being completely idle during these tests. As Themaister has indicated in his blog post , this leaves so much room for upscaled resolutions, which is on the roadmap for future versions.

You can change these by going to Quick Menu and going to Options. However, there are certain games that might produce problems if left disabled. An example of such a game is Resident Evil 2. Usually the performance difference is much higher though. Try experimenting with it. This applied plenty of postprocessing to the final output image to further smooth out the picture. Disabling some of these and enabling some others could be beneficial if you want to use several frontend shaders on top, since disabling some of these postprocessing effects could result in a radically different output image.

Turning this off essentially looks like basic bob deinterlacing, so the picture might become shaky as a result. Subtle difference in output, but usually seems to apply to shadow blob decals.

Here is the short answer: no. Not by us, at least. If one would be able to make it work, it would only work on the very best GL implementation, where Vulkan is supported anyways, rendering it mostly moot. Ports to DirectX 12 are similarly not going to be considered by us, though others can feel free to do so.

Whoever will take on the endeavor to port this to DX12 or GL 4. Right now there are no automated nightly builds for this, but you can download our experimental stable for it instead. While using RetroArch, if you playback audio content such as via the Control Center or if you are interrupted by a phone call, the audio in RetroArch would stop entirely.

Also, took out the bit to save the config when the app loses focus. It became too much of a distraction (the notification is distracting), and this was not working previously anyway.

Before, the OpenGL Core shader driver did not correctly initialise loaded textures. The wrap mode seemed to work regardless (perhaps once this is set the first time, it cannot change?). For example, this is what a background image with linear filtering enabled looks like: This PR fixes texture initialisation so the filtering and wrap mode are recorded correctly. A linear filtered background image now looks like this:

This represents a large amount of unnecessary disk access, which is quite slow and also causes wear on solid state drives! This produces noticeably smoother scrolling when switching playlists in XMB. This improvement is most likely platform-dependent, but on devices where storage speed is a real issue e. It goes without saying that RetroArch will automatically detect whether or not a playlist is compressed and handle it appropriately.
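The automatic detection described above can be sketched in a few lines of Python. This is only an illustration: gzip and JSON are used as stand-ins because the text does not say which archive or playlist format RetroArch uses, and `save_playlist` / `load_playlist` are hypothetical names, not RetroArch functions.

```python
import gzip
import json

# First two bytes of every gzip stream.
GZIP_MAGIC = b"\x1f\x8b"


def save_playlist(data: dict, compress: bool) -> bytes:
    """Serialise a playlist, optionally compressing it (gzip is a stand-in)."""
    raw = json.dumps(data).encode("utf-8")
    return gzip.compress(raw) if compress else raw


def load_playlist(blob: bytes) -> dict:
    """Load a playlist, detecting compression from the leading magic bytes."""
    if blob[:2] == GZIP_MAGIC:
        blob = gzip.decompress(blob)
    return json.loads(blob.decode("utf-8"))
```

Because the reader inspects the data itself rather than a setting, toggling compression never strands playlists that were written under the other mode.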

This is a minor follow-up to PR When enabled, SRAM save files are written to disk as compressed archives. While SRAM saves are generally quite small, this can still yield a not insignificant space saving on storage-starved devices e. Moreover, it reduces wear on solid state drives when SaveRAM Autosave Interval is set: in the worst case, this can write a couple of MB to disk per minute, vs.

Actual compression ratios will vary greatly depending upon core and loaded content. When enabled, save state files are written to disk as compressed archives. This both saves a substantial amount of disk space and reduces wear on solid state drives. Actual compression ratios will vary depending upon core and loaded content. Before, when the manual content scanner was used to scan content that includes M3U files, redundant playlist entries were created.

For example, content like this:. This is annoying, since the latter are pointless, and must be removed manually by the user. This functionality has also been added to the Playlist Management Clean Playlist task, so these redundant entries can be removed easily from existing playlists. Side note: 1.
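As a rough sketch of the clean-up described above: an M3U file lists one disc image per line, so any playlist entry that an M3U already references can be dropped. The function names and the sample paths below are hypothetical, and the way RetroArch itself resolves paths is not described in the text.

```python
import posixpath


def parse_m3u(text):
    """Return the disc paths listed in an M3U file (blank and '#' lines skipped)."""
    lines = (line.strip() for line in text.splitlines())
    return [line for line in lines if line and not line.startswith("#")]


def drop_redundant_entries(entries, m3u_contents):
    """Remove playlist entries that are already referenced by an M3U file.

    entries:      list of content paths in the playlist
    m3u_contents: dict mapping each M3U path to the text of that file
    """
    referenced = set()
    for m3u_path, text in m3u_contents.items():
        base = posixpath.dirname(m3u_path)
        for disc in parse_m3u(text):
            # Disc paths are assumed to be relative to the M3U file's folder.
            referenced.add(posixpath.normpath(posixpath.join(base, disc)))
    return [e for e in entries if posixpath.normpath(e) not in referenced]
```

With an M3U at `games/ff7.m3u` listing two discs, only the M3U entry and unrelated single-disc content remain in the result.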

At present, RetroArch offers a global Sort playlists alphabetically option, but several users have requested more fine grained control. This allows the sorting method to be overridden on a per-playlist basis. Available values are System Default (reflects the Sort playlists alphabetically setting), Alphabetical and None. The old flow was: sort playlist, loop through playlist and generate menu entries, sort menu entries. Not only did this duplicate effort, but it meant there was a chance of the playlist and menu going out of sync, especially when using the Label Display Mode feature, which could lead to a different alphabetical ordering when processing the generated menu entries.
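A minimal sketch of the per-playlist override, assuming the three values map to the strings `"default"`, `"alphabetical"` and `"none"` (hypothetical identifiers, as are the function names). The playlist is sorted once and the menu is generated in that order, so the two cannot drift apart.

```python
def should_sort(playlist_mode, global_alphabetical):
    """Resolve the per-playlist mode against the global setting."""
    if playlist_mode == "alphabetical":
        return True
    if playlist_mode == "none":
        return False
    # "default": fall back to the global Sort playlists alphabetically option.
    return global_alphabetical


def build_menu(labels, playlist_mode, global_alphabetical):
    """Sort the playlist once, then emit menu entries in playlist order."""
    if should_sort(playlist_mode, global_alphabetical):
        # Case-insensitive ordering is an assumption of this sketch.
        labels = sorted(labels, key=str.casefold)
    return list(labels)
```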

As of 1. With 1. When metadata is shown in this way, an image icon is displayed to indicate that a second thumbnail is available. This PR modifies RGUI such that its frame buffer dimensions are automatically reduced when running at low resolutions. Note that the old behaviour is maintained for the Wii port, because it has special requirements relating to resolution changes. The content scanner was unable to identify games from CHD images on Android builds same files that are being properly identified on Windows builds.

It was discovered that both the extracted magic number and CRC hash differed on both builds. This should now be resolved. The Saturn is a beast. It had to draw the lines with an extra pixel where the slope changed, so all of the pixels had a neighbor to the left, right, top, or bottom.

They did this to prevent gaps between the lines. There are tricks like tessellation, but ultimately they are just workarounds for specific issues and not all-in-one solutions for this. Here is some good news though: with OpenGL 4. It is what this renderer is about: reproducing VDP1 behavior accurately.
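To illustrate the extra-pixel rule described above, here is a small sketch built on the standard Bresenham line algorithm. Whenever the line would step diagonally, one additional pixel is emitted so that consecutive pixels always share an edge. This is not the VDP1's actual implementation: which side the extra pixel lands on is an assumption, and the function name is made up for this example.

```python
def line_with_corner_pixels(x0, y0, x1, y1):
    """Rasterise a line so every pixel has a left/right/top/bottom neighbour."""
    dx, dy = abs(x1 - x0), abs(y1 - y0)
    sx = 1 if x1 >= x0 else -1
    sy = 1 if y1 >= y0 else -1
    err = dx - dy
    x, y = x0, y0
    pixels = [(x, y)]
    while (x, y) != (x1, y1):
        e2 = 2 * err
        step_x = e2 > -dy
        step_y = e2 < dx
        if step_x and step_y:
            # Diagonal step: add the corner pixel so no gap can appear
            # between this line and an adjacent parallel one.
            pixels.append((x + sx, y))
        if step_x:
            err -= dy
            x += sx
        if step_y:
            err += dx
            y += sy
        pixels.append((x, y))
    return pixels
```

Plain Bresenham would draw the diagonal from (0, 0) to (2, 2) with three pixels that only touch at their corners; this variant emits five pixels that form a staircase.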

There are 2 things noticeable related to this VDP1 behavior in those :. Until recently we used such workaround but, in the case of Sega Rally, it was magnifying the dots on the border of the road. There were some long-term issues with the Libretro implementation, but a lot of improvements were done about them :.

If you want to know more about this emulator, you can check the youtube channel , or join us on discord. Our last core progress report was on February 29, Implement aspect ratio core option psx. Additionally fixes aspect ratio scaling issues when cropping overscan or adjusting visible scanlines. Max and auto modes are broken on some systems. Used by chuchu rocket login.

Fix otrigger inputs. This would lead to very weird results if they would ever be used together. In the emulator cpmcart is runtime-enable only on x64 and x64sc but the relevant code is still compiled-in. So just remove cpmcart. Written by jdgleaver This is a followup article to our main release blog post, which can be read here.

Launching initially with 20 hand-picked cores, a further seven have been added to the list: Mr. Grab it here. RetroArch back in action! What remains to be done: Some cores will still need to be added (mainly cores that require compilation with CMake for iOS). Windows binaries right now are still not codesigned.

We have ordered an Extended Validation code signing certificate which will allow our Windows binaries in the future to pass through SmartScreen with no issue. This will cost us a pretty penny each year but we consider the additional cost worth it for our users. We hope that by the time the next stable rolls out of the door, these binaries will all be signed, as will the nightlies.

Because of this, be aware that while installing RetroArch on Windows with the Installer, you might be greeted by a SmartScreen filter that the application is unsafe. You can safely ignore this. For the first time ever, we also have a new backwards compatible version of RetroArch for iOS 9 users. We intend to follow this up in the near future with an iOS 6 version.

We here at RetroArch believe in the promise of backwards compatibility and we always intend RetroArch to be an omnipresent platform that can be run on any device you want.

We love the ability of users being able to turn their obsolete iDevices into capable little RetroArch handheld machines. For the first time, macOS stables and nightlies are codesigned and notarized. So you no longer need to resort to workarounds in order to install them on your Mac.

We have nightly and stable releases for OpenDingux now, including a special release for a beta firmware. This version should have significantly better performance. There are now nightly and stable releases for Linux available for both 64bit and 32bit x86 PCs in the form of AppImage bundles.

There are two available packages: one that includes the Qt desktop menu and one without, in case your system-installed Qt libs conflict with the ones it comes bundled with. The AppImage builds should work with most distros that were released in the last three years or so.

This will be added later. We hope to be able to fix this as soon as our Extended Validation codesigning certificate is fully operational. Expect these to be added back later. This prevents graphical issues if the gfx is already initialized. This is the most common cache line size, helps with performance.

Also fixes issues with platforms like PSP that wrongly assume that malloc returns aligned buffers to 16bytes. Previously we would wait on the condition variable even in the non-blocking case.

Should fix crashes with slang shaders. The ffmpeg core seems to do this. D3D Fix shaders with scaled framebuffers D3D Add flip model support, with a fallback to blit model for OSes where flip model is not supported (Windows 7 and earlier). The probe function skips the driver if the screen is non rotated to fall back to the regular DRM driver.

This is a faster rendering codepath for software rendered libretro cores that some libretro cores use right now. Video drivers in RetroArch have to explicitly implement this for this codepath to work at runtime. Overlays will be hidden automatically when a gamepad is connected in port 1, and shown again when the gamepad is disconnected. This adds support to delete old save states with a user defined save state limit global.

Instead of wrapping around the slot counter it will simply delete the oldest save, since it is simpler. This also allows WiFi passwords to be remembered.
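The delete-the-oldest behaviour for the save state limit can be sketched as follows. The function name and the convention that a limit of 0 means "unlimited" are assumptions made for this example, not details taken from RetroArch.

```python
def states_to_prune(states, limit):
    """Pick the oldest save states to delete so at most `limit` remain.

    states: list of (path, modification_time) tuples
    limit:  maximum number of save states to keep (0 is treated as unlimited)
    """
    if limit <= 0:
        return []
    excess = len(states) - limit
    if excess <= 0:
        return []
    oldest_first = sorted(states, key=lambda state: state[1])
    return [path for path, _ in oldest_first[:excess]]
```

A caller would pass the result to `os.remove` after each new save, which keeps the slot counter increasing instead of wrapping around.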

The iOS version requires you to have iOS 11 or later installed. How many cores available at launch? Special thanks Special thanks to Xer The Squirrel, kivutar, harakari and others for helping out with the key signing and work on our new infrastructure.

Notes This is an experimental feature right now. Thunder Force IV: Set this to 22 or lower depending on your preference. Thunder Force IV: Set this to There are some big issues with them though that limits their viability as something an average consumer can just buy readily off the shelf: 1. RetroArch Integration We have some high-level goals we aim to achieve with this project. In addition, Switch dock support will be there from Day One, working out of the box.

How does it work? Prototype This project has been ongoing now for the better part of a year. Xbox Series RetroArch raised a lot of heads last year when people figured out how to run it on the new Xbox Series consoles.

Metal will be the default video driver, since unlike RetroArch for Mac Intel, backwards compatibility is not a concern here. This has been consuming quite a bit of time on our end building up the core library.

So far, we have nearly 70 cores ready on our buildbot and more to come. Some dormant MoltenVK interfacing code has existed for a while in RetroArch but never really used before. Once complete, it would allow us to run Vulkan-based cores on RetroArch Mac. This port will rely in large parts on a member that we are collaborating with called Booger.

An update on the progress we have been making on our new infrastructure: we have figured out a way for now to build Visual Studio-based cores that rely on CMake. There are some hurdles to overcome with our current Gitlab-based infrastructure. If you compile and build RetroArch Metal for Mac right now on your own Mac, it will point you to the Core Downloader where you will be able to grab these cores from.

Code signing will be figured out for Mac-provided cores and software soon. The RetroArch installer for Windows is still unavailable and is coming soon.

Where can I download stuff? Same place as before: buildbot. We are of course also going to be releasing a new stable soon: 1. What will all this mean for the user? What will all this mean for the contributor?

Instead, the way it works now is: You create a. It needs to be in the root directory of your repository. You specify all the platforms here that the core should be building for. We then add this repository as a CI mirror to our Gitlab server. If all goes well, it spits out builds and these will then be available for download on buildbot.

Special notes on Android builds Read our dedicated news article here on the Android build s The nightly build that can be downloaded on our site right now is the non-Play Store version. Special thanks Special thanks to all the people that helped participate in this tremendous server migration: m4xw, jdgleaver, gblues, farmerbb, Steel01, fjtrujy, frangarcj, Xer Shadow Tail, and any others that participated.

RetroArch Play Store: two separate versions. RetroArch on the Google Play Store is going to be different now from the version you can download on our website. Both the cores and RetroArch itself will be updated in the process. You will no longer have to wait for months on end for a new version. Instead, new versions will be pushed to the Play Store automatically.

The release highlights include: A reworked and well-optimised SDL-based graphics driver, with numerous features that were missing from the original experimental port e. A custom gamepad driver that seamlessly integrates the peculiar input configuration of OpenDingux devices (a hybrid of virtual keyboard inputs and analog sticks) and which offers full rumble support.

All of the following RetroArch and core improvements have come about as a direct result of this endeavour: RetroArch now has a robust mechanism for implementing automatic frame-skipping based on audio buffer occupancy. The main features of this fork are: as easy as a console game emulator by loading DOS games from ZIP files and saving into separate save files; support for save states and even rewinding; automatic game detection with custom gamepad to keyboard mapping for many DOS games; mouse, keyboard and joystick emulation for gamepads and an on-screen keyboard. Other features are support for cheats, a built-in MIDI software synthesizer (needs an SF2 soundfont file), a disc swapping menu and a start menu that lists EXE files controllable by gamepad.

Hi there, we are finally nearing the final transition stages of our infrastructure to the new system. When making a Libretro core, you dig deep into the internals of a program, you figure out how the runloop works, and you reimplement it.

Sometimes a program makes that easy to do because its own internal runloop is already setup well for this, other times it requires a lot more effort. To make a long story short, all this takes hard work and effort, and we try to do it on a consistent basis in a way that provides additional added value to the user.

Whether one core might not fit that description does not invalidate the vast majority of cores where this is the rule, and not the exception. In this case, it has to be said that not every single commit that ever gets pushed to a repository is of importance, or even important to a libretro port to begin with.

For instance, an update to some GUI framework is oftentimes completely unimportant to the Libretro core. We work hard on a daily basis to keep cores updated as much as possible.

We update projects as much as possible and we try to make sure in the process that they continue to run well. Keeping a core updated is one thing, but you also want to ensure that it continues working on the platforms we care for, that the performance is maintained, etc. We go above and beyond the call of duty to pay attention to these things.

Thanks for participating! So with that in mind, we are giving away keys for our first beta test version, Beta 1. How do you get a Steam beta key? We refer to the following section: Crowdfunding. No core updater. You install cores through Steam Store instead. We have made 10 cores available as DLC so far.

They are all free and are already available on Steam. All updateable assets including shaders, overlays, etc are pre-packaged and updated with new RetroArch builds. Basically, nothing is downloadable from our servers, everything goes through Steam. No Desktop Menu. Remote Play support.

See next paragraph. Which cores are available right now on Steam? What are they? What changed? All keys are gone. Better savestates support Over the last months, savestate issues that could cause glitches, mostly with runahead single instance and netplay, were fixed. This includes Neo Geo, CPS1, PGM, Irem M92, Sega System 1 and, last but not least, pretty much any game using Yamaha sound boards, and I can tell you those boards were quite popular in the 90s.

Thanks to m4xw and Xer The Squirrel, we have managed to: Restore our buildbot server. Restore the vandalised Github repositories. State of the buildbot server We have managed to restore most of the 1. All the stable versions prior to 1. The Core Installer should work again on any RetroArch build. State of the Github organization Most of the affected Github repositories have been restored.

No real data loss has happened and things should be back to normal on the organization. New server Thanks to the massive outpouring of support on our Patreon in the wake of the attack, we now have the additional resources to massively beef up our server infrastructure. The websites for these have also been rendered inaccessible for the moment. He gained access to our Libretro organization on Github impersonating a very trusted member of the team and force-pushed a blank initial commit to a fair percentage of our repositories, effectively wiping them.

He managed to do damage to 3 out of 9 pages of repositories. RetroArch and everything preceding it on page 3 has been left intact before his access got curtailed. We wanted to clear up some confusion that may have arisen in the wake of this news breaking: No cores or RetroArch installations should be considered compromised. The attacker simply wiped our buildbot server clean, there is nothing being distributed that could be considered malicious to your system.

Nothing has happened here and there is no need for any concern. For the current time being, the Core Installer is non-functional until further notice.

Lack of automated backups This brings us onto another key issue: the lack of backups. How will we restore things So, how are we going to restore things? Some possibilities that might exist: We keep RetroArch dynamically linked, but each core has to be installed separately through the Google Play interface as installable DLC.

NOTE: We have no idea if this even works the way it can with Steam, so it would have to be explored first. We make RetroArch statically linked and therefore there needs to be a separate new app store entry for every single combination of RetroArch with every single core. Where to download RetroArch for Android for now If you want the latest 1.

Right now on the Play Store, version 1. A Libretro Cores Progress Report will follow later. Usecases There are tons of ways you can use the Explore view to find what you want. Here are some of them: Granular filtering Here is a good example of the kind of powerful context-sensitive filtering that is possible with the Explore view. We will now filter the entries by a specific developer to narrow down our search.

Recognized BIOS images: scph Conclusion You should definitely give DuckStation a go if you want a high performance PlayStation1 emulator. The animation works both for content launched via the menu and via the command line.

The long-anticipated big update to Mupen64Plus-Next has finally arrived! How to use it To use it, go to Quick Menu, Options. This feature will significantly help platforms like Nintendo Switch and Raspberry Pi.
